How to transform open-source data for NLP training

Introduction

Good datasets are the foundation of any successful artificial intelligence, especially in NLP. A poorly built, biased, or overly shallow dataset will produce a flawed AI. Conversely, well-controlled datasets improve the results. Working on the data at the very start of a project is therefore a fundamental part of any development.

Through a detailed example, we will walk through the different steps that turn raw data into relevant datasets that are easy to build and to access.

Objective

Our goal was to obtain datasets for training our AI to automate responses to requests for quotes (bids and asks) at P&G sent by email. An efficient AI has to be trained on many points: it must not only correctly recognize RFQs (Requests for Quote), which is its main purpose, but also recognize what is not an RFQ, for example introductory phrases, signatures (automatic or not), informal conversations, and emails sent by mistake or poorly worded.

This requires learning and training on specific notions, and therefore on numerous and rich datasets. This is where our work is essential.

About the dataset

To develop an AI able to analyze chains of information and requests from emails, we needed a relevant dataset to train it on. Datasets of this kind are hard to come by, because companies in the financial sector are quite cautious about disclosing data.

However, there is one of considerable size: a collection of email exchanges from the early 2000s at a large American energy company.

After the company went bankrupt, all non-sensitive emails were collected and made public in a well-known dataset that has already found many applications in NLP, especially in sentiment analysis.

The dataset is a compressed folder containing the mailboxes of 150 of the company's employees: their received, sent, and deleted emails, their outbox, etc. It contains a total of 517,401 emails.

These emails are both professional and informal, such as those exchanged between colleagues, and even include unwanted emails from outside the company.

The result is an extraordinarily rich dataset, but also one full of data that is a priori irrelevant from our point of view (informal conversations between employees, for example). It is therefore important to have a very rigorous classification method so that we can make the most of all this data.

More precisely, the goal here is to process this data so that training datasets can easily be built from it. It was therefore essential to extract the key elements that make each email easy to identify, and thus easy to classify.

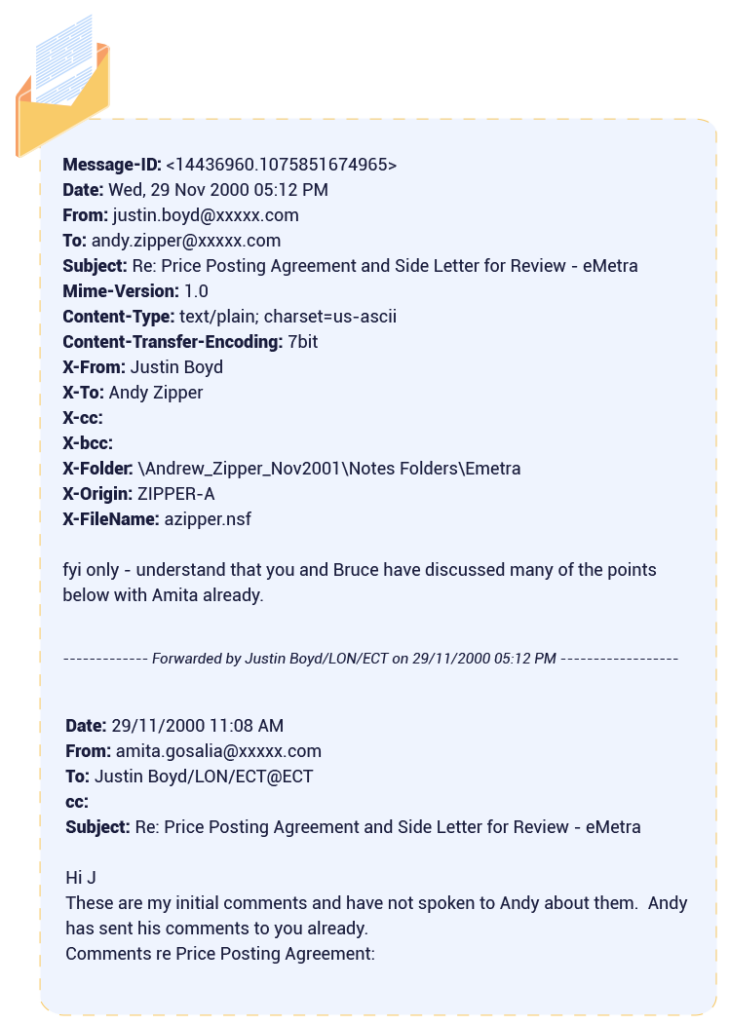

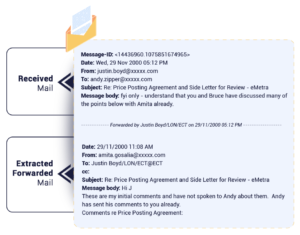

Once the dataset (1.5 GB) was decompressed and inspected manually for a first analysis, we found a consistent pattern in the format of the emails: some of their attributes were always in the same place.

Two points caught our attention:

- A botched email migration “damaged” some emails, which are now filled with unwanted characters. These emails must be identified and handled so that they can be used properly;

- Thousands of emails are email chains. Splitting these chains would improve both the volume and the quality of our dataset.
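The text does not specify what the unwanted characters look like. As a minimal sketch, assuming the debris consists of control characters, private-use or unassigned code points, and U+FFFD replacement characters left by failed decoding, damaged emails could be flagged by the share of such characters and naively repaired by dropping them. The 5% threshold and all function names are hypothetical and would need tuning on a manually checked sample.

```python
import unicodedata

# Hypothetical threshold: share of suspicious characters above which an
# email is flagged as damaged. Tune it on a manually checked sample.
DAMAGE_THRESHOLD = 0.05

# Unicode categories treated as debris: control, private-use, unassigned.
DEBRIS_CATEGORIES = {"Cc", "Co", "Cn"}
ALLOWED_CONTROLS = {"\n", "\r", "\t"}  # ordinary whitespace is not debris


def is_debris(ch: str) -> bool:
    """True for characters assumed to come from a failed migration."""
    if ch in ALLOWED_CONTROLS:
        return False
    # U+FFFD is the replacement character left behind by failed decoding.
    return ch == "\ufffd" or unicodedata.category(ch) in DEBRIS_CATEGORIES


def suspicious_ratio(text: str) -> float:
    """Fraction of characters in text that look like migration debris."""
    if not text:
        return 0.0
    return sum(is_debris(ch) for ch in text) / len(text)


def is_damaged(text: str, threshold: float = DAMAGE_THRESHOLD) -> bool:
    return suspicious_ratio(text) > threshold


def strip_debris(text: str) -> str:
    """Naive repair: drop the suspicious characters, keep everything else."""
    return "".join(ch for ch in text if not is_debris(ch))
```

Dropping characters is the simplest possible repair; if the damage turns out to follow a recognizable encoding pattern, decoding it properly would preserve more of the original text.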

The dataset therefore has the following volumes:

After processing, this would give us a total of 622,791 emails.

Data processing

The main goal was to be able to create subsets tailored to our specific needs, in order to train our AI on custom datasets. What can be done with such a vast dataset? We imagined numerous business-related uses, such as identifying company names and stocks, or recognizing entities related to the trading sector. We also had more generic uses in mind: we can train our AI to recognize greetings, email signatures, and anything that is not business-relevant. This list is not exhaustive: other uses may emerge later, depending on our needs.

Data with clear metadata is easy to understand and to access. We therefore needed to extract and isolate as much information as possible from the emails and parse it efficiently, to enable fast and effective queries.

Parsing was straightforward because, as we saw earlier, every email reliably contains fields identifying the sender, subject, recipient, etc. By isolating these elements, we can classify the emails meaningfully, which makes the data easy to manage.
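Because the raw messages follow a regular header layout, Python's standard `email` package can do most of this parsing. The sketch below assumes RFC 5322-style headers (`Message-ID`, `Date`, `From`, `To`, `Cc`, `Subject`) and a single-part plain-text body; the output field names are our own choice for illustration.

```python
from email import message_from_string
from email.utils import getaddresses, parsedate_to_datetime


def parse_email(raw: str) -> dict:
    """Extract the header fields used to index an email, plus its body."""
    msg = message_from_string(raw)
    # get_all() returns the fallback ([]) when a header is absent.
    recipients = getaddresses(msg.get_all("To", []) + msg.get_all("Cc", []))
    date_header = msg.get("Date")
    return {
        "message_id": (msg.get("Message-ID") or "").strip(),
        # Normalize the date to ISO 8601 so it can be indexed as a date.
        "date": parsedate_to_datetime(date_header).isoformat() if date_header else None,
        "from": (msg.get("From") or "").strip(),
        "to": [addr for _, addr in recipients if addr],
        "subject": msg.get("Subject", ""),
        # Assumes a single-part, plain-text body.
        "body": msg.get_payload(),
    }
```

Each returned dict maps directly onto a document that can be stored and queried field by field.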

All the elements presented here are stored as full attributes of each email, which lets us easily link emails together and reconstruct the chronology of a conversation. The Message-ID deserves special mention: it is a unique identifier generated by the company's email management tool. It lets us reconstruct an email chain from any one of its messages, for example to give context to an interesting email found in the dataset.
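Linking emails through their Message-ID can be sketched as an index plus a walk along parent links. This assumes a hypothetical `parent_id` field on each record, holding the Message-ID of the email it replies to or forwards (for example filled in when chains are split, or taken from an `In-Reply-To` header when one exists).

```python
from typing import Optional


def build_index(emails: list[dict]) -> dict[str, dict]:
    """Map each Message-ID to its email record for constant-time lookups."""
    return {e["message_id"]: e for e in emails}


def thread_of(message_id: str, index: dict[str, dict]) -> list[dict]:
    """Follow parent links up to the root; return the thread oldest-first.

    Each record is assumed to carry a hypothetical 'parent_id' field holding
    the Message-ID of the email it replies to or forwards (None for a root).
    """
    thread = []
    seen = set()  # guards against accidental cycles in the links
    current: Optional[str] = message_id
    while current and current in index and current not in seen:
        seen.add(current)
        email = index[current]
        thread.append(email)
        current = email.get("parent_id")
    thread.reverse()
    return thread
```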

Most of the work therefore consisted of two key tasks, both aimed at improving the quality of our data:

- Repairing emails damaged during a migration, which had become hard for an AI to read: about 20,000 of the 517,401 emails in the dataset were “repaired”;

- Processing chains of forwarded emails and splitting them into independent emails, which let us add 2,663 emails (with updated information) to the dataset.
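Splitting a chain comes down to finding the separator lines that mail clients insert when replying or forwarding. The separators matched below (`-----Original Message-----` and `----- Forwarded by ... -----`) are assumptions about what the corpus contains, not an exhaustive list, and the headers of each extracted message would still have to be re-parsed afterwards.

```python
import re

# Assumed separator lines: Outlook-style "-----Original Message-----" and
# Lotus Notes-style "----- Forwarded by ... -----". Extend as needed.
SEPARATOR = re.compile(
    r"^[ \t]*-{3,}[ \t]*(?:Original Message|Forwarded by[^\n]*)[ \t]*-*[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)


def split_chain(body: str) -> list[str]:
    """Split a reply/forward chain into its messages, newest first."""
    parts = SEPARATOR.split(body)
    # Drop empty fragments left around separators.
    return [part.strip() for part in parts if part.strip()]
```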

After processing the data, we get something like this for each email:

Storage solution

Once this work was done, we had to choose a storage solution that would let us efficiently build relevant datasets from our original dataset.

Several options were available, especially in the NoSQL space. Two stood out: MongoDB and Elasticsearch.

Here is a comparison table showing which of the two solutions is stronger in each area:

| | Elasticsearch | MongoDB |
|---|---|---|
| Distributed search | ✅ | ❌ |
| Distributed storage | ❌ | ✅ |
| Full-text search | ✅ | ❌ |
| Search analyzers | ✅ | ❌ |
| CRUD operations | ❌ | ✅ |
| Visualization tool | ✅ | ❌ |

We chose Elasticsearch.

What sealed our choice, beyond its document indexing, was its wide range of search capabilities, which are endlessly adaptable and extremely flexible. In addition to the notion of a “score”, which quantifies how relevant each result returned by a query is, Elasticsearch has a powerful JSON-based DSL that lets development teams build complex queries and tune them to get the most accurate search results. It also provides ways to sort and aggregate results.
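To give an idea of the DSL, here is a sketch of a query body built as a plain Python dict: a full-text `match` clause that drives the score, a non-scoring date filter, a sort, and a `terms` aggregation. The field names (`body`, `from`, `date`) are hypothetical, and `from` is assumed to be mapped as a `keyword` field so it can be aggregated exactly.

```python
import json


def rfq_query(text: str, since: str, size: int = 20) -> dict:
    """Build an Elasticsearch Query DSL body as a plain dict.

    Field names ('body', 'from', 'date') are hypothetical; 'from' is assumed
    to be mapped as a keyword field so it can be aggregated.
    """
    return {
        "query": {
            "bool": {
                # 'must' clauses contribute to the relevance score (_score).
                "must": [{"match": {"body": {"query": text, "operator": "and"}}}],
                # 'filter' clauses restrict the results without affecting the score.
                "filter": [{"range": {"date": {"gte": since}}}],
            }
        },
        # Most relevant first, most recent first on ties.
        "sort": [{"_score": "desc"}, {"date": "desc"}],
        # Count the matching emails per sender.
        "aggs": {"by_sender": {"terms": {"field": "from", "size": 10}}},
        "size": size,
    }


print(json.dumps(rfq_query("quote price", "2001-01-01"), indent=2))
```

The printed JSON can be sent as the body of a `_search` request (for example `POST /emails/_search`, where `emails` is a hypothetical index name) or pasted into Kibana's Dev Tools console.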

We could also use Kibana, the visualization tool for Elasticsearch.

Because it is dedicated to Elasticsearch, Kibana is easy to use and configure. It gives us a quick overview of our data and lets us write queries intuitively to build and evaluate datasets. By giving us a better understanding of our data, it made building the datasets easier, and this understanding is the first building block for building them efficiently. Finally, since we did not plan to modify the database, one of MongoDB's strengths (CRUD operations) mattered less.

To reinforce this solution and make sure we did not overlook relevant data, we put several complementary measures in place. This is where all the other data we extracted comes into play: identifier, date, sender, recipient. Together, these fields let us reconstruct a conversation. For example, by relying on an email's Message-ID, we can retrieve all the emails that may have been forwarded from or linked to it. Moreover, knowing exactly who took part in a conversation and when lets us reconstruct it from a single email.
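Reconstructing a conversation from its participants and dates can be sketched as a simple matching rule: the same set of participants, the same subject once reply/forward prefixes are stripped, and dates close to the seed email. The rule and the 7-day window are illustrative assumptions, and each record's `date` is assumed to already be a `datetime`.

```python
import re
from datetime import datetime, timedelta

# Reply/forward prefixes such as "RE:", "Fw:", "FWD: RE:" (possibly repeated).
PREFIX = re.compile(r"^(?:\s*(?:re|fwd|fw)\s*:\s*)+", re.IGNORECASE)


def normalize_subject(subject: str) -> str:
    return PREFIX.sub("", subject).strip().lower()


def participants(email: dict) -> frozenset:
    """Sender plus recipients, ignoring direction (a reply has the same set)."""
    return frozenset([email["from"], *email["to"]])


def within(a: datetime, b: datetime, window: timedelta) -> bool:
    return abs(a - b) <= window


def conversation_of(seed: dict, emails: list[dict], window_days: int = 7) -> list[dict]:
    """Emails with the seed's participants and subject, near its date, oldest first."""
    subject = normalize_subject(seed["subject"])
    people = participants(seed)
    window = timedelta(days=window_days)
    matches = [
        e for e in emails
        if normalize_subject(e["subject"]) == subject
        and participants(e) == people
        and within(e["date"], seed["date"], window)
    ]
    return sorted(matches, key=lambda e: e["date"])
```

In practice the candidate list would come from a filtered Elasticsearch query rather than a full scan.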

The upstream work, combined with this solution, gives us a huge, detailed, and meaningfully classified dataset from which we can derive any dataset tailored to our needs.

Thanks to Kibana, we can visualize our data easily. All that remained was to add a pinch of human intelligence to build datasets tailored to our many needs: recognizing trading-related entities, company names, signatures, and so on.
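The human step can start from a query's results. As a sketch, the function below turns Elasticsearch hits (the `hits.hits` array of a search response, each carrying a `_source` document) into JSON Lines records with a candidate label for a reviewer to confirm. The `body` and `message_id` fields, the label names, and the `needs_review` flag are hypothetical.

```python
import json
from typing import Iterable


def hits_to_jsonl(hits: Iterable[dict], label: str) -> list[str]:
    """Convert search hits into JSON Lines records for human review."""
    lines = []
    for hit in hits:
        doc = hit["_source"]
        record = {
            "message_id": doc["message_id"],
            "text": doc["body"],
            "label": label,        # candidate label, e.g. "rfq" or "signature"
            "needs_review": True,  # flipped by the reviewer once validated
        }
        # ensure_ascii=False keeps accented characters readable in the file.
        lines.append(json.dumps(record, ensure_ascii=False))
    return lines
```

Writing `"\n".join(lines) + "\n"` to a `.jsonl` file produces a format that most NLP training tools can load directly.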

Conclusion

As we have seen, a lot of preparation work is needed to get quality results with NLP:

- First, you need a source of raw data relevant to the task at hand, and finding one can be very time-consuming. It is quite rare to find a dataset that perfectly matches your needs, so you sometimes have to settle for datasets that do not exactly match your initial goal and reprocess them afterwards. That is what we did here.

- It is also essential to think about integrating this data intelligently. This has two aspects:

  - The first is working on the raw data to get the most out of it: this can mean extracting metadata (as we did here), reshaping the data to better fit our needs, or even deleting superfluous data.

  - The second concerns storing and accessing the data. As we saw earlier, many solutions are available, from the most classic and proven ones (Oracle SQL…) to newer, more flexible NoSQL solutions such as MongoDB or Elasticsearch. Each has its advantages, and it is important to choose one that fits what you want to achieve.

Once this is done, you have the foundation for building robust NLP.

The next challenge is building your datasets and preparing them for NLP. This will be covered in episode 2, so stay tuned!